Understanding Performance Mysteries

An SQL text by Erland Sommarskog, SQL Server MVP. Last revision: 2026-01-25.

Copyright applies to this text. See here for font conventions used in this article.

This article is also available in Russian, translated by Dima Piliugin.

When I read various forums about SQL Server, I frequently see questions from deeply mystified posters. They have identified a slow query or slow stored procedure in their application. They take the SQL batch from the application and run it in SQL Server Management Studio (SSMS) to analyse it, only to find that the response is instantaneous. At this point they are inclined to think that SQL Server is all about magic. A similar mystery is when a developer has extracted a query in his stored procedure to run it stand-alone only to find that it runs much faster – or much slower – than inside the procedure.

No, SQL Server is not about magic. But if you don't have a good understanding of how SQL Server compiles queries and maintains its plan cache, it may seem so. Furthermore, there are some unfortunate combinations of different defaults in different environments that contribute to this confusion. In this article, I will try to straighten out why you get this seemingly inconsistent behaviour. I explain how SQL Server compiles a stored procedure, what parameter sniffing is and why it is part of the equation in the vast majority of these confusing situations. I explain how SQL Server uses the cache, and why there may be multiple entries for a procedure in the cache. Once you have come this far, you will understand how come the query runs so much faster in SSMS.

To understand how to address that performance problem in your application, you need to read on. I first make a short break from the theme of parameter sniffing to discuss a few situations where there are other reasons for the difference in performance. This is followed by two chapters on how to deal with performance problems where parameter sniffing is involved. The first is about gathering information. In the second chapter I discuss some scenarios – both real-world situations I have encountered and more generic ones – and possible solutions. Next comes a chapter where I discuss how dynamic SQL is compiled and interacts with the plan cache, and why there are more reasons you may experience differences in performance between SSMS and the application with dynamic SQL. In the last full chapter I look at how you can use Query Store, a feature that was introduced in SQL 2016, for your troubleshooting. There are two more chapters, which are more like appendixes. The first discusses a feature known as PSP optimisation, introduced in SQL 2022, which is an attempt to reduce the performance impact of parameter sniffing. The second appendix discusses how temp-table caching can have effects similar to those of parameter sniffing. At the end, there is a section with links to Microsoft white papers and similar documents in this area.

Table of Contents

How SQL Server Compiles a Stored Procedure

How SQL Server Generates the Query Plan

Putting the Query Plan into the Cache

Different Plans for Different Settings

The Effects of Statement Recompile

Statement Recompile and Table Variables and Table-Valued Parameters

It's Not Always Parameter Sniffing...

Replacing Variables and Parameters

Getting Information to Solve Parameter-Sniffing Problems

Getting the Query Plans and Parameters with Management Studio

Getting the Query Plans and Parameters Directly from the Plan Cache

Getting the Most Recent Actual Execution Plan

Getting Query Plans and Parameters from a Trace

Getting Table and Index Definitions

Finding Information About Statistics

Examples of How to Fix Parameter-Sniffing Issues

The Case of the Application Cache

The Query Text is the Hash Key

The Significance of the Default Schema

Running Application Queries in SSMS

Finding Sniffed Parameter Values

Forcing Plans with Query Store

Setting Hints through Query Store

Appendix A: PSP Optimisation in SQL 2022

The essence of this article applies to all versions of SQL Server from SQL 2005 and on. The article includes several queries to inspect the plan cache. Beware that to run these queries you need to have the server-level permission VIEW SERVER PERFORMANCE STATE. (This permission was introduced in SQL 2022. On earlier versions, you will need the permission VIEW SERVER STATE.) When a feature was introduced in a certain version or is only available in some editions of SQL Server, I try to mention this. On the other hand, I don't explicitly mention whether a certain feature or behaviour is available on Azure SQL Database or Azure SQL Managed Instance. Since these platforms are cutting-edge, you can assume that they support everything I discuss in this article. However, the names of some DMVs may be different on Azure SQL Database.

For the examples in this article, I use the Northwind sample database. This is an old demo database from Microsoft, which you find in the file Northwind.sql. (I have modified the original version to replace legacy LOB types with MAX types.)

This is not a beginner-level article, but I assume that the reader has a working experience of SQL programming. You don't need to have any prior experience of performance tuning, but it certainly helps if you have looked a little at query plans and if you have some basic knowledge of indexes, that is even better. I will not explain the basics in depth, as my focus is a little beyond that point. This article will not teach you everything about performance tuning, but at least it will be a start.

The majority of the screenshots and output in this article were collected with SSMS 18.8 against an instance of SQL Server running SQL 2019 CU8. If you use a different version of SSMS and/or SQL Server you may see slightly different results. I would however recommend that you use the most recent version of SSMS which you can download here.

In this chapter we will look at how SQL Server compiles a stored procedure and uses the plan cache. If your application does not use stored procedures, but submits SQL statements directly, most of what I say in this chapter is still applicable. But there are further complications with dynamic SQL, and since the facts about stored procedures are confusing enough, I have deferred the discussion on dynamic SQL to a separate chapter.

What is a stored procedure? That may seem like a silly question, but the question I am getting at is: What objects have query plans on their own? SQL Server builds query plans for four types of objects: stored procedures, scalar user-defined functions, multi-statement table-valued functions and triggers.

With a more general and stringent terminology I should talk about modules, but since stored procedures are by far the most widely used type of module, I prefer to talk about stored procedures to keep it simple.

For other types of objects than the four listed above, SQL Server does not build query plans. Specifically, SQL Server does not create query plans for views and inline-table functions. Queries like:

SELECT abc, def FROM myview

SELECT a, b, c FROM mytablefunc(9)

are no different from ad-hoc queries that access the tables directly. When compiling the query, SQL Server expands the view/function into the query, and the optimizer works with the expanded query text.

There is one more thing we need to understand about what constitutes a stored procedure. Say that you have two procedures, where the outer calls the inner one:

CREATE PROCEDURE Outer_sp AS

...

EXEC Inner_sp

...

I would guess most people think of Inner_sp as being independent from Outer_sp, and indeed it is. The execution plan for Outer_sp does not include the query plan for Inner_sp, only the invocation of it. However, there is a very similar situation where I've noticed that posters on SQL forums often have a different mental image, to wit dynamic SQL:

CREATE PROCEDURE Some_sp AS

DECLARE @sql nvarchar(MAX),

@params nvarchar(MAX)

SELECT @sql = 'SELECT ...'

...

EXEC sp_executesql @sql, @params, @par1, ...

It is important to understand that this is no different from nested stored procedures. The generated SQL string is not part of Some_sp, nor does it appear anywhere in the query plan for Some_sp, but it has a query plan and a cache entry of its own. This applies, no matter if the dynamic SQL is executed through EXEC() or sp_executesql.
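To see this for yourself, here is a minimal sketch, assuming the Northwind database. The procedure name Some_demo_sp and the query in the SQL string are made up for illustration. After one execution, the plan cache should hold one entry of type Proc for the procedure and a separate entry of type Prepared for the parameterised SQL string.

```sql
-- Hypothetical demo procedure; the name and the query are assumptions.
CREATE PROCEDURE Some_demo_sp @fromdate datetime AS
   DECLARE @sql    nvarchar(MAX),
           @params nvarchar(MAX)
   SELECT @sql = N'SELECT OrderID, OrderDate FROM dbo.Orders WHERE OrderDate > @fromdate'
   SELECT @params = N'@fromdate datetime'
   EXEC sp_executesql @sql, @params, @fromdate
go
EXEC Some_demo_sp '19980101'
go
-- List the cache entries for the procedure and for the dynamic SQL.
-- The filter on objtype keeps this ad-hoc query itself out of the result.
SELECT cp.objtype, cp.usecounts, est.text
FROM   sys.dm_exec_cached_plans cp
CROSS  APPLY sys.dm_exec_sql_text(cp.plan_handle) est
WHERE  cp.objtype IN ('Proc', 'Prepared')
  AND  (est.text LIKE '%Some_demo_sp%' OR
        est.text LIKE '%OrderDate > @fromdate%')
go
DROP PROCEDURE Some_demo_sp
```

Like the other plan-cache queries in this article, this one requires VIEW SERVER PERFORMANCE STATE or VIEW SERVER STATE, depending on the version.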

Starting with SQL 2019, scalar user-defined functions have become a blurry case. In this version, Microsoft introduced inlining of scalar functions, which is a great improvement for performance. There is no specific syntax to make a scalar function inlined, but instead SQL Server decides on its own whether it is possible to inline a certain function. To confuse matters more, inlining does not happen in all contexts. For instance, if you have a computed column that calls a scalar UDF, inlining will not happen, even if the function as such qualifies for it. Thus, a scalar user-defined function may have a cache entry of its own, but you should first look at the plan for the query you are working with; you may find the logic for the function inside has been expanded into the plan. This is nothing we will discuss further in this article, but I wanted to mention it here to get our facts straight.

When you enter a stored procedure with CREATE PROCEDURE (or CREATE FUNCTION for a function or CREATE TRIGGER for a trigger), SQL Server verifies that the code is syntactically correct, and also checks that you do not refer to non-existing columns. (But if you refer to non-existing tables, it lets you get away with it, due to a misfeature known as deferred name resolution.) However, at this point SQL Server does not build any query plan, but merely stores the query text in the database.
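The difference between missing tables and missing columns can be illustrated with a small sketch against Northwind. The procedure names, dbo.NoSuchTable and NoSuchColumn are made up for the demonstration.

```sql
-- Succeeds, although dbo.NoSuchTable does not exist (deferred name resolution).
CREATE PROCEDURE Deferred_demo_sp AS
   SELECT SomeColumn FROM dbo.NoSuchTable
go
-- Fails at creation with "Invalid column name", since the table Orders
-- exists, but the column does not.
CREATE PROCEDURE Bad_column_demo_sp AS
   SELECT NoSuchColumn FROM dbo.Orders
go
-- For the first procedure, the error for the missing table does not
-- appear until run-time.
EXEC Deferred_demo_sp
go
DROP PROCEDURE Deferred_demo_sp
```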

It is not until a user executes the procedure, that SQL Server creates the plan. For each query, SQL Server looks at the distribution statistics it has collected about the data in the tables in the query. From this, it makes an estimate of what may be the best way to execute the query. This phase is known as optimisation. While the procedure is compiled in one go, each query is optimised on its own, and there is no attempt to analyse the flow of execution. This has a very important ramification: the optimizer has no idea about the run-time values of variables. However, it does know what values the user specified for the parameters to the procedure.

Consider the Orders table in the Northwind database, and these three procedures:

CREATE PROCEDURE List_orders_1 AS

SELECT * FROM Orders WHERE OrderDate > '20000101'

go

CREATE PROCEDURE List_orders_2 @fromdate datetime AS

SELECT * FROM Orders WHERE OrderDate > @fromdate

go

CREATE PROCEDURE List_orders_3 @fromdate datetime AS

DECLARE @fromdate_copy datetime

SELECT @fromdate_copy = @fromdate

SELECT * FROM Orders WHERE OrderDate > @fromdate_copy

go

Note: Using SELECT * in production code is bad practice. I use it in this article to keep the examples concise.

Then we execute the procedures in this way:

EXEC List_orders_1

EXEC List_orders_2 '20000101'

EXEC List_orders_3 '20000101'

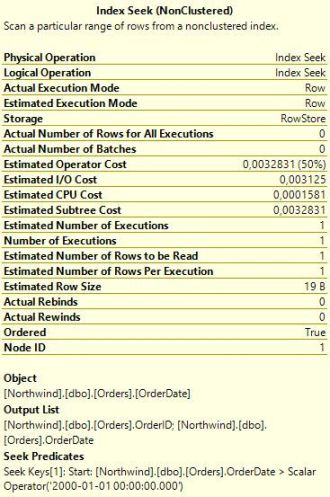

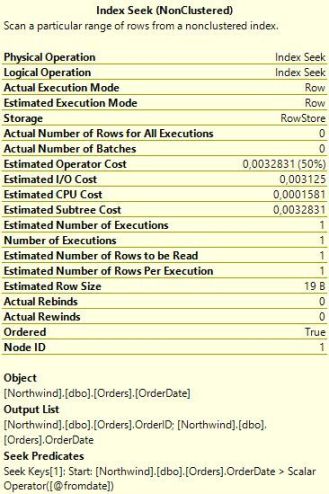

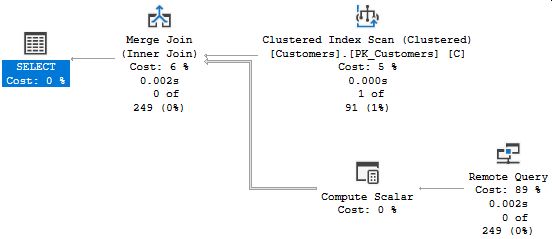

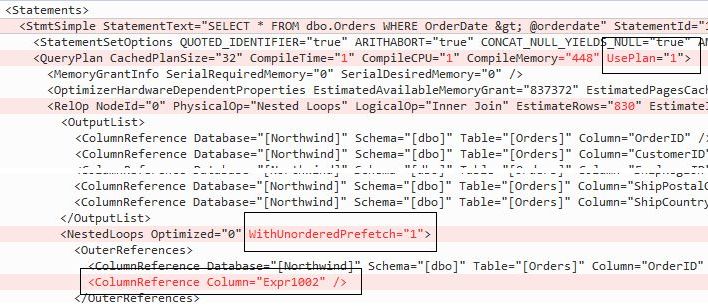

Before you run the procedures, enable Include Actual Execution Plan under the Query menu. (There is also a toolbar button and Ctrl-M is the normal keyboard shortcut.) If you look at the query plans for the procedures, you will see the first two procedures have identical plans:

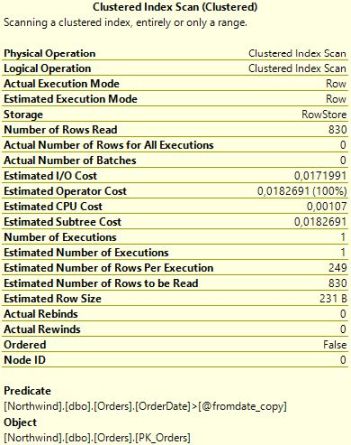

That is, SQL Server seeks the index on OrderDate, and uses a key lookup to get the other data. The plan for the third execution is different:

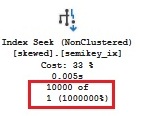

In this case, SQL Server scans the table. (Keep in mind that in a clustered index the leaf pages contain the data, so a clustered index scan and a table scan are essentially the same thing.) Why this difference? To understand why the optimizer makes certain decisions, it is always a good idea to look at what estimates it is working with. If you hover with the mouse over the two Seek operators and the Scan operator, you will see pop-ups similar to those below.

[Figure: operator pop-ups for List_orders_1, List_orders_2 and List_orders_3]

The interesting element is Estimated Number of Rows Per Execution. For the first two procedures, SQL Server estimates that one row will be returned, but for List_orders_3, the estimate is 249 rows. This difference in estimates explains the different choice of plans. Index Seek + Key Lookup is a good strategy to return a smaller number of rows from a table. But when more rows match the seek criteria, the cost increases, and there is an increased likelihood that SQL Server will need to access the same data page more than once. In the extreme case where all rows are returned, a table scan is much more efficient than seek and lookup. With a scan, SQL Server has to read every data page exactly once, whereas with seek + key lookup, every page will be visited once for each row on the page. The Orders table in Northwind has 830 rows, and when SQL Server estimates that as many as 249 rows will be returned, it (rightly) concludes that the scan is the best choice.

Now we know why the optimizer arrives at different execution plans: because the estimates are different. But that only leads to the next question: why are the estimates different? That is the key topic of this article.

In the first procedure, the date is a constant, which means that SQL Server only needs to consider exactly this case. It interrogates the statistics for the Orders table, which indicate that there are no rows with an OrderDate in the third millennium. (All orders in the Northwind database are from 1996 to 1998.) Since statistics are statistics, SQL Server cannot be sure that the query will return no rows at all, so it settles for an estimate of one single row.
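If you want to look at the statistics the optimizer consults here, a sketch like the one below may help. It assumes that the index on OrderDate in your copy of Northwind is named OrderDate, as it is in the standard script; adjust the name if yours differs.

```sql
-- Show the histogram for the statistics that belong to the index on OrderDate.
-- The index name 'OrderDate' is an assumption about your copy of Northwind.
DBCC SHOW_STATISTICS ('dbo.Orders', 'OrderDate') WITH HISTOGRAM

-- Compare with the actual range of dates in the table.
SELECT MIN(OrderDate) AS first_order, MAX(OrderDate) AS last_order
FROM   dbo.Orders
```

The highest RANGE_HI_KEY in the histogram should be a date in 1998, which is why a date in 2000 gives the minimum estimate.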

In the case of List_orders_2, the query is against a variable, or more precisely a parameter. When performing the optimisation, SQL Server knows that the procedure was invoked with the value 2000-01-01. Since it does not perform any flow analysis, it can't say for sure whether the parameter will have this value when the query is executed. Nevertheless, it uses the input value to come up with an estimate, which is the same as for List_orders_1: one single row. This strategy of looking at the values of the input parameters when optimising a stored procedure is known as parameter sniffing.

In the last procedure, it's all different. The input value is copied to a local variable, but when SQL Server builds the plan, it has no understanding of this and says to itself "I don't know what the value of this variable will be". Because of this, it applies a standard assumption, which for an inequality operation such as > is a 30 % hit-rate. 30 % of 830 is indeed 249.
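The arithmetic behind the estimate is easy to reproduce. The query below is only an illustration of the 30 % rule described above, not something SQL Server runs itself; the column aliases are made up.

```sql
-- For an inequality against a value unknown to the optimizer, the standard
-- assumption is that 30 % of the rows qualify.
SELECT COUNT(*)        AS total_rows,
       COUNT(*) * 0.30 AS guess_for_unknown_inequality
FROM   dbo.Orders
```

With an unmodified Northwind, the second column should agree with the 249 rows in the pop-up for List_orders_3.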

Here is a variation of the theme:

CREATE PROCEDURE List_orders_4 @fromdate datetime = NULL AS

IF @fromdate IS NULL

SELECT @fromdate = '19900101'

SELECT * FROM Orders WHERE OrderDate > @fromdate

In this procedure, the parameter is optional, and if the user does not fill in the parameter, all orders are listed. Say that the user invokes the procedure as:

EXEC List_orders_4

The execution plan is identical to the plan for List_orders_1 and List_orders_2. That is, Index Seek + Key Lookup, even though all orders are returned. If you look at the pop-up for the Index Seek operator, you will see that it is identical to the pop-up for List_orders_2 except in one regard: the actual number of rows.

When compiling the procedure, SQL Server does not know that the value of @fromdate changes, but compiles the procedure under the assumption that @fromdate has the value NULL. Since all comparisons with NULL yield UNKNOWN, the query cannot return any rows at all, if @fromdate still has this value at run-time. If SQL Server took the input value as the final truth, it could construct a plan with only a Constant Scan that does not access the table at all (run the query SELECT * FROM Orders WHERE OrderDate > NULL to see an example of this). But SQL Server must generate a plan which returns the correct result no matter what value @fromdate has at run-time. On the other hand, there is no obligation to build a plan which is the best for all values. Thus, since the assumption is that no rows will be returned, SQL Server settles for the Index Seek. (The estimate is still that one row will be returned. This is because SQL Server never uses an estimate of 0 rows.)

This is an example of when parameter sniffing backfires, and in this particular case it may be better to write the procedure in this way:

CREATE PROCEDURE List_orders_5 @fromdate datetime = NULL AS

DECLARE @fromdate_copy datetime

SELECT @fromdate_copy = coalesce(@fromdate, '19900101')

SELECT * FROM Orders WHERE OrderDate > @fromdate_copy

With List_orders_5 you always get a Clustered Index Scan.

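In this section, we have learned three very important things. A constant is a constant, and SQL Server can use its value with full trust. For a parameter, SQL Server does not know the run-time value, but it sniffs the input value when compiling the query. For a local variable, SQL Server has no idea about the run-time value, and applies standard assumptions.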

And there is a corollary of this: if you take out a query from a stored procedure and replace variables and parameters with constants, you now have quite a different query. More about this later.

Before we move on, a little more about what you can see in modern versions of SSMS. Here we looked at the popups to see the estimates and the actual values. However, if you look in the graphical plan you can see that below the operators it says things like 0 of 249. This means 0 actual rows of 249 estimated. Below is a detail from the plan for List_orders_4. When it says 830 of 1, this means that 830 rows were returned, but that the estimate was one single row.

This does not mean that in modern versions of SSMS you never need to look at the popups. There are more actual and estimated values that are worth looking at. For instance, I often compare Number of Executions with Estimated Number of Executions. But it is certainly handy to have the estimated and actual number of rows present directly in the graphic plan, as a big difference between the two is often part of the answer to why a query is slow, and we will look into this more later in this article.

If SQL Server compiled a stored procedure – that is, optimised and built a query plan – every time the procedure is executed, there is a big risk that SQL Server would crumble from all the CPU resources it would take. I immediately need to qualify this, because it is not true for all systems. In a big data warehouse where a handful of business analysts run complicated queries that take a minute on average to execute, there would be no damage if there was compilation every time – rather it could be beneficial. But in an OLTP database where plenty of users run stored procedures with short and simple queries, this concern is very much for real.

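For this reason, SQL Server caches the query plan for a stored procedure, so when the next user runs the procedure, the compilation phase can be skipped, and execution can commence directly. The plan will stay in the cache, until some event forces the plan out of the cache. Examples of such events are: SQL Server needs the memory for something else and ages the plan out of the cache; someone runs ALTER PROCEDURE or sp_recompile on the procedure; someone runs DBCC FREEPROCCACHE, which removes all plans from the cache; or SQL Server is restarted, since the cache lives in memory only.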

If such an event occurs, a new query plan will be created the next time the procedure is executed. SQL Server will anew "sniff" the input parameters, and if the parameter values are different this time, the new query plan may be different from the previous plan.

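There are other events that do not cause the entire procedure plan to be evicted from the cache, but which trigger recompilation of one or more individual statements in the procedure. The recompilation occurs the next time the statement is executed. This applies even if the event occurred after the procedure started executing. Here are examples of such events: the definition of a table in the statement is changed; an index on a table in the statement is added or dropped; there are new or updated statistics for a table in the statement; or someone runs sp_recompile on a table referred to in the statement.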

These lists are by no means exhaustive, but you should observe one thing which is not there: executing the procedure with different values for the input parameters from the original execution. That is, if the second invocation of List_orders_2 is:

EXEC List_orders_2 '19900101'

The execution will still use the index on OrderDate, even though the query is now retrieving all orders. This leads to a very important observation: the parameter values of the first execution of the procedure have a huge impact on subsequent executions. If this first set of values for some reason is atypical, the cached plan may not be optimal for future executions. This is why parameter sniffing is such a big deal.
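The effect can be observed with a sequence like the one below, a sketch that assumes the List_orders_2 procedure from earlier in this chapter. Run it with Include Actual Execution Plan enabled and compare the plans for the three executions.

```sql
EXEC sp_recompile 'dbo.List_orders_2'   -- Start without a cached plan.
go
EXEC List_orders_2 '20000101'           -- Compiles; sniffs a value that matches no rows.
go
EXEC List_orders_2 '19900101'           -- Reuses the cached plan, although all rows match.
go
EXEC sp_recompile 'dbo.List_orders_2'   -- Flush the plan for the procedure.
go
EXEC List_orders_2 '19900101'           -- Compiles anew; sniffs the new value.
go
```

The first two executions should show Index Seek + Key Lookup. For the last one, the optimizer is free to choose differently, and with all 830 rows qualifying, a Clustered Index Scan is the likely outcome.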

Note: for a complete list of what can cause plans to be flushed or statements to be recompiled, see the white paper on Plan Caching listed in the Further Reading section.

There is a plan for the procedure in the cache. That means that everyone can use it, right? No, in this section we will learn that there can be multiple plans for the same procedure in the cache. To understand this, let's consider this contrived example:

CREATE PROCEDURE List_orders_6 AS

SELECT * FROM Orders WHERE OrderDate > '12/01/1998'

go

SET DATEFORMAT dmy

go

EXEC List_orders_6

go

SET DATEFORMAT mdy

go

EXEC List_orders_6

go

If you run this, you will notice that the first execution returns many orders, whereas the second execution returns no orders. And if you look at the execution plans, you will see that they are different as well. For the first execution, the plan is a Clustered Index Scan (which is the best choice with so many rows returned), whereas the second execution plan uses Index Seek with Key Lookup (which is the best when no rows are returned).

How could this happen? Did SET DATEFORMAT cause recompilation? No, that would not be smart. In this example, the executions come one after another, but they could just as well be submitted in parallel by different users with different settings for the date format. Keep in mind that the entry for a stored procedure in the plan cache is not tied to a certain session or user, but it is global to all connected users.

Instead the answer is that SQL Server creates a second cache entry for the second execution of the procedure. We can see this if we peek into the plan cache with this query:

SELECT qs.plan_handle, a.attrlist

FROM sys.dm_exec_query_stats qs

CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) est

CROSS APPLY (SELECT epa.attribute + '=' + convert(nvarchar(127), epa.value) + ' '

FROM sys.dm_exec_plan_attributes(qs.plan_handle) epa

WHERE epa.is_cache_key = 1

ORDER BY epa.attribute

FOR XML PATH('')) AS a(attrlist)

WHERE est.objectid = object_id ('dbo.List_orders_6')

AND est.dbid = db_id('Northwind')

Reminder: You need the server-level permission VIEW SERVER PERFORMANCE STATE to run queries against the plan cache. On versions prior to SQL 2022, you need the permission VIEW SERVER STATE.

The DMV (Dynamic Management View) sys.dm_exec_query_stats has one entry for each query currently in the plan cache. If a procedure has multiple statements, there is one row per statement. Of interest here are sql_handle and plan_handle. I use sql_handle to determine which procedure the cache entry relates to (later we will see examples where we also retrieve the query text) so that we can filter out all other entries in the cache. Most often you use plan_handle to retrieve the query plan itself, and we will see an example of this later, but in this query I access a DMV that returns the attributes of the query plan. More specifically, I return the attributes that are cache keys. When there is more than one entry in the cache for the same procedure, the entries have at least one difference in the cache keys. A cache key is a run-time setting, which for one reason or another calls for a different query plan. Most of these settings are controlled with a SET command, but not all.
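As a hedged preview of the other use of plan_handle, the sketch below retrieves the statement text and the plan XML for the same cache entries. It is a variation on the query above, not the query used later in the article, and the column aliases are my own.

```sql
SELECT qs.plan_handle, qs.execution_count,
       -- The offsets are in bytes and the text is nvarchar, hence the division by 2.
       substring(est.text, qs.statement_start_offset / 2 + 1,
                 (CASE qs.statement_end_offset
                       WHEN -1 THEN datalength(est.text)
                       ELSE qs.statement_end_offset
                  END - qs.statement_start_offset) / 2 + 1) AS stmt_text,
       qp.query_plan
FROM   sys.dm_exec_query_stats qs
CROSS  APPLY sys.dm_exec_sql_text(qs.sql_handle) est
CROSS  APPLY sys.dm_exec_query_plan(qs.plan_handle) qp
WHERE  est.objectid = object_id('dbo.List_orders_6')
  AND  est.dbid = db_id('Northwind')
```

In SSMS, you can click the XML in the query_plan column to open the graphical plan.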

The query above returns two rows, indicating that there are two entries for the procedure in the cache. The output may look like this:

plan_handle attrlist

------------------------------- -------------------------------------------------

0x0500070064EFCA5DB8A0A90500... compat_level=150 date_first=7 date_format=1

set_options=4347 user_id=1

0x0500070064EFCA5DB8A0A80500... compat_level=150 date_first=7 date_format=2

set_options=4347 user_id=1

To save space, I have abbreviated the plan handles and deleted many of the values in the attrlist column. I have also folded that column into two lines. If you run the query yourself, you can see the complete list of cache keys, and there are quite a few of them. If you look up the topic for sys.dm_exec_plan_attributes in Books Online, you will see descriptions for many of the plan attributes, but you will also note that far from all cache keys are documented. In this article, I will not dive into all cache keys, not even the documented ones, but focus only on the most important ones.

As I said, the example is contrived, but it gives a good illustration of why the query plans must be different: different date formats may yield different results. A somewhat more normal example is this:

EXEC sp_recompile List_orders_2

go

SET DATEFORMAT dmy

go

EXEC List_orders_2 '12/01/1998'

go

SET DATEFORMAT mdy

go

EXEC List_orders_2 '12/01/1998'

go

(The initial sp_recompile is to make sure that the plan from the previous example is flushed.) This example yields the same results and the same plans as with List_orders_6 above. That is, the two query plans use the actual parameter value when the respective plan is built. The first query uses 12 Jan 1998, and the second 1 Dec 1998.

A very important cache key is set_options. This is a bit mask that gives the setting of a number of SET options that can be ON or OFF. If you look further in the topic of sys.dm_exec_plan_attributes, you find a listing that details which SET option each bit describes. (You will also see that there are a few more items that are not controlled by the SET command.) Thus, if two connections have any of these options set differently, the connections will use different cache entries for the same procedure – and therefore they could be using different query plans, with possibly a big difference in performance.

One way to translate the set_options attribute is to run this query:

SELECT convert(binary(4), 4347)

This tells us that the hex value for 4347 is 0x10FB. Then we can look in Books Online and follow the table to find out that the following SET options are in force: ANSI_PADDING, Parallel Plan, CONCAT_NULL_YIELDS_NULL, ANSI_WARNINGS, ANSI_NULLS, QUOTED_IDENTIFIER, ANSI_NULL_DFLT_ON and ARITHABORT.
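The manual lookup can also be sketched as a query. The bit values below are transcribed from the table in the topic for sys.dm_exec_plan_attributes; treat them as an assumption and verify them against the documentation for your version. The names bitval and Set_option are my own. The sketch uses syntax that requires SQL 2008 or later.

```sql
DECLARE @set_options int = 4347   -- The value from the attrlist column above.

-- Return the options whose bit is set in the mask.
SELECT b.bitval, b.Set_option
FROM  (VALUES (1,      'ANSI_PADDING'),
              (2,      'Parallel Plan'),
              (4,      'FORCEPLAN'),
              (8,      'CONCAT_NULL_YIELDS_NULL'),
              (16,     'ANSI_WARNINGS'),
              (32,     'ANSI_NULLS'),
              (64,     'QUOTED_IDENTIFIER'),
              (128,    'ANSI_NULL_DFLT_ON'),
              (256,    'ANSI_NULL_DFLT_OFF'),
              (512,    'NoBrowseTable'),
              (1024,   'TriggerOneRow'),
              (2048,   'ResyncQuery'),
              (4096,   'ARITH_ABORT'),
              (8192,   'NUMERIC_ROUNDABORT'),
              (16384,  'DATEFIRST'),
              (32768,  'DATEFORMAT'),
              (65536,  'LanguageID'),
              (131072, 'UPON'),
              (262144, 'ROWCOUNT')) AS b(bitval, Set_option)
WHERE  @set_options & b.bitval = b.bitval
ORDER  BY b.bitval
```

For 4347, this should list the same eight options as in the paragraph above.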

You can also use this table-valued function that I have written and run:

SELECT Set_option FROM setoptions (4347) ORDER BY Set_option

Note: You may be wondering what Parallel Plan is doing here, not the least since the plan in the example is not parallel. When SQL Server builds a parallel plan for a query, it may later also build a non-parallel plan if the CPU load in the server is such that it is not defensible to run a parallel plan. It seems that even for a plan that is always serial, the bit for parallel plan is nevertheless set in set_options.

To simplify the discussion, we can say that each of these SET options – ANSI_PADDING, ANSI_NULLS etc – is a cache key on its own. The fact that they are added together in a single numeric value is just a matter of packaging.

Just about all of the SET ON/OFF options that are cache keys exist for legacy reasons. Originally, in the dim and distant past, SQL Server included a number of behaviours that violated the ANSI standard for SQL. With SQL Server 6.5, Microsoft introduced all these SET options (save for ARITHABORT, which was in the product already in 4.x), to permit users to use SQL Server in an ANSI-compliant way. In SQL 6.5, you had to use the SET options explicitly to get ANSI compliance, but with SQL 7, Microsoft changed the defaults for clients that used the new versions of the ODBC and OLE DB APIs. The SET options still remained to provide backwards compatibility for older clients.

Note: In case you are curious what effect these SET options have, I refer you to Books Online. Some of them are fairly straight-forward to explain, whereas others are just too confusing. To understand this article, you only need to understand that they exist, and what impact they have on the plan cache.

Alas, Microsoft did not change the defaults with full consistency, and even today the defaults depend on how you connect, as detailed in the table below.

| | Applications using ADO .Net, ODBC or OLE DB | SSMS | SQLCMD, OSQL, BCP, SQL Server Agent | DB-Library (very old) |
|---|---|---|---|---|
| ANSI_NULL_DFLT_ON | ON | ON | ON | OFF |
| ANSI_NULLS | ON | ON | ON | OFF |
| ANSI_PADDING | ON | ON | ON | OFF |
| ANSI_WARNINGS | ON | ON | ON | OFF |
| CONCAT_NULL_YIELDS_NULL | ON | ON | ON | OFF |
| QUOTED_IDENTIFIER | ON | ON | OFF | OFF |
| ARITHABORT | OFF | ON | OFF | OFF |

You might see where this is going. Your application connects with ARITHABORT OFF, but when you run the query in SSMS, ARITHABORT is ON and thus you will not reuse the cache entry that the application uses, but SQL Server will compile the procedure anew, sniffing your current parameter values, and you may get a different plan than from the application. So there you have a likely answer to the initial question of this article. There are a few more possibilities that we will look into in the next chapter, but by far the most common reason for slow in the application, fast in SSMS is parameter sniffing and the different defaults for ARITHABORT. (If that was all you wanted to know, you can stop reading. If you want to fix your performance problem – hang on! And, no, putting SET ARITHABORT ON in the procedure is not the solution.)
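To check which settings the application and your SSMS session actually run with, you can look at the sessions currently connected. This is a sketch; the choice of columns and the ordering are my own.

```sql
-- Compare the SET options of all user sessions, for instance the
-- application's connections and your own query window in SSMS.
SELECT s.session_id, s.program_name, s.arithabort, s.quoted_identifier,
       s.ansi_nulls, s.ansi_padding, s.ansi_warnings,
       s.concat_null_yields_null, s.ansi_null_dflt_on,
       s.date_format, s.date_first
FROM   sys.dm_exec_sessions s
WHERE  s.is_user_process = 1
ORDER  BY s.program_name, s.session_id
```

If the rows for the application show arithabort = 0 while the row for SSMS shows 1, the two will not share cache entries. Note that you need server-level permissions to see sessions other than your own.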

Besides the SET command and the defaults above, ALTER DATABASE permits you to say that a certain SET option always should be ON by default in a database and thus override the default set by the API. These options are intended for very old applications running DB-Library as listed in the right-most column above. While the syntax may indicate so, you cannot specify that an option should be OFF this way. Also, beware that if you test these options from Management Studio, they may not seem to work, since SSMS submits explicit SET commands, overriding any default. There is also a server-level setting for the same purpose, the configuration option user options which is a bit mask. You can set the individual bits in the mask from the Connection pages of the Server Properties in Management Studio. Overall, I recommend against controlling the defaults this way, as in my opinion they mainly serve to increase the confusion.

It is not always the run-time setting of an option that applies. When you create a procedure, view, table etc, the settings for ANSI_NULLS and QUOTED_IDENTIFIER are saved with the object. That is, if you run this:

SET ANSI_NULLS, QUOTED_IDENTIFIER OFF

go

CREATE PROCEDURE stupid @x int AS

IF @x = NULL PRINT "@x is NULL"

go

SET ANSI_NULLS, QUOTED_IDENTIFIER ON

go

EXEC stupid NULL

It will print

@x is NULL

(When QUOTED_IDENTIFIER is OFF, double quote (") is a string delimiter on equal basis with single quote ('). When the setting is ON, double quotes delimit identifiers in the same way that square brackets ([]) do and the PRINT statement would yield a compilation error.)

In addition, the setting for ANSI_PADDING is saved per table column where it is applicable, that is, the data types varchar and varbinary.
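The saved settings are exposed in the catalog views, so you can look for objects that were created with unfortunate settings. The two queries below are a sketch to run in the database you are investigating; the column aliases are my own.

```sql
-- Modules (procedures, functions, triggers, views) saved with
-- ANSI_NULLS or QUOTED_IDENTIFIER OFF.
SELECT object_schema_name(m.object_id) AS sch,
       object_name(m.object_id)        AS module_name,
       m.uses_ansi_nulls, m.uses_quoted_identifier
FROM   sys.sql_modules m
WHERE  m.uses_ansi_nulls = 0
   OR  m.uses_quoted_identifier = 0

-- varchar and varbinary columns created with ANSI_PADDING OFF.
SELECT object_schema_name(c.object_id) AS sch,
       object_name(c.object_id)        AS table_name,
       c.name                          AS column_name,
       type_name(c.system_type_id)     AS data_type
FROM   sys.columns c
JOIN   sys.tables t ON t.object_id = c.object_id
WHERE  type_name(c.system_type_id) IN ('varchar', 'varbinary')
  AND  c.is_ansi_padded = 0
```

Ideally, both queries return no rows.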

All these options and different defaults are certainly confusing, but here are some pieces of advice. First, remember that the first six of these seven options exist only to supply backwards compatibility, so there is little reason why you should ever have any of them OFF. Yes, there are situations when some of them may seem to buy a little more convenience if they are OFF, but don't fall for that temptation. One complication here, though, is that the SQL Server tools spew out SET commands for some of these options when you script objects. Thankfully, they mainly produce SET ON commands that are harmless. (But when you script a table, scripts may have a SET ANSI_PADDING OFF at the end. You can control this under Tools->Options->Scripting where you can set Script ANSI_PADDING commands to False, which I recommend.)

Next, when it comes to ARITHABORT, you should know that in SQL 2005 and later versions, this setting has zero impact as long as ANSI_WARNINGS is ON. (To be precise: it has no impact as long as the compatibility level is 90 or higher.) Thus, there is no reason to turn it on for the sake of the matter. And when it comes to SQL Server Management Studio, you might want to do yourself a favour and open this dialog and uncheck SET ARITHABORT as highlighted:

This will change your default setting for ARITHABORT when you connect with SSMS. It will not help you to make your application run faster, but you will at least not have to be perplexed by getting different performance in SQL Server Management Studio.
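To see what the application and your query window actually run with, you can compare the sessions side by side. The query below is a minimal sketch; it assumes that you have permission to see other sessions (VIEW SERVER STATE), and it only shows the options most relevant to this discussion.

```sql
-- One row per user session; compare the application's rows with the
-- rows for SSMS (identified through program_name).
SELECT session_id, program_name, arithabort, quoted_identifier, ansi_nulls,
       ansi_warnings, ansi_padding, concat_null_yields_null
FROM   sys.dm_exec_sessions
WHERE  is_user_process = 1
ORDER  BY program_name, session_id
```

If the arithabort column differs between the application and SSMS, the two will use different cache entries for the same procedure.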

Note: You may note that on this page I have checked SET XACT_ABORT ON, which is unchecked by default. This setting is not a cache key and has no effect on performance. However, it has an effect on what happens in case of an execution error, which I discuss in my article Error and Transaction in SQL Server. While unrelated to this article, I absolutely recommend you to have this setting checked in SSMS.

For reference, below is what the ANSI page should look like. A very strong recommendation: never change anything on this page!

When it comes to SQLCMD and OSQL, make it a habit to always use the -I option, which causes these tools to run with QUOTED_IDENTIFIER ON. The corresponding option for BCP is -q. (To confuse matters, -q has one more effect for BCP which I discuss in my article Using the Bulk-Load Tools in SQL Server.) It's a little more difficult in Agent, since there is no way to change the default for Agent – at least I have not found any. Then again, if you only run stored procedures from your job steps, this is not an issue, since the saved setting for stored procedures takes precedence. But if you run loose batches of SQL from Agent jobs, you could face the problem with different query plans in the job and SSMS because of the different defaults for QUOTED_IDENTIFIER. For such jobs, you should always include the command SET QUOTED_IDENTIFIER ON as the first command in the job step.
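As an illustration, a job step with a loose batch could start like this. The query itself is a made-up placeholder against Northwind; only the first line is the point.

```sql
-- First command in the job step, so that the rest of the step runs
-- with the same QUOTED_IDENTIFIER setting as SSMS.
SET QUOTED_IDENTIFIER ON

-- Hypothetical job-step code; replace with the real batch.
SELECT COUNT(*) FROM Orders WHERE OrderDate > '19980101'
```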

We have already looked at SET DATEFORMAT, and there are two more options in that group: LANGUAGE and DATEFIRST. The default language is configured per user, and there is a server-wide configuration option which controls what is the default language for new users. The default language controls the default for the other two. Since they are cache keys, this means that two users with different default languages will have different cache entries, and may thus have different query plans.
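The settings in this group can be inspected with a couple of catalog queries. The sketch below shows what the current session runs with and which default language each login has; the filter on the type column is my assumption about which principals are of interest (SQL logins, Windows logins and Windows groups).

```sql
-- Language-related settings in effect for the current session.
SELECT language, date_format, date_first
FROM   sys.dm_exec_sessions
WHERE  session_id = @@spid

-- Default language per login.
SELECT name, type_desc, default_language_name
FROM   sys.server_principals
WHERE  type IN ('S', 'U', 'G')
ORDER  BY default_language_name, name
```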

My recommendation is that you should try to avoid being dependent on language and date settings in SQL Server altogether. For instance, in as far as you use date literals at all, use a format that is always interpreted the same, such as YYYYMMDD. (For more details about date formats, see the article The ultimate guide to the datetime datatypes by SQL Server MVP Tibor Karaszi.) If you want to produce localised output from a stored procedure depending on the user's preferred language, it may be better to roll your own than rely on the language setting in SQL Server.
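The difference between a safe and an unsafe date format can be demonstrated with a small experiment. The dates are arbitrary, and the languages are picked only because they imply different date formats.

```sql
SET LANGUAGE British        -- implies DATEFORMAT dmy
SELECT convert(datetime, '1998-01-02') AS with_hyphens,    -- read as year-day-month
       convert(datetime, '19980102')   AS without_hyphens  -- always 2 January 1998
go
SET LANGUAGE us_english     -- implies DATEFORMAT mdy
SELECT convert(datetime, '1998-01-02') AS with_hyphens,    -- read as year-month-day
       convert(datetime, '19980102')   AS without_hyphens  -- always 2 January 1998
```

Note that this concerns the datetime data type; the format with hyphens is interpreted according to the current date format, whereas YYYYMMDD is not.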

To get a complete picture of how SQL Server builds the query plan, we need to study what happens when individual statements are recompiled. Above, I mentioned a few situations where it can happen, but at that point I did not go into details.

The procedure below is certainly contrived, but it serves well to demonstrate what happens.

CREATE PROCEDURE List_orders_7 @fromdate datetime,
                               @ix bit AS
   SELECT @fromdate = dateadd(YEAR, 2, @fromdate)
   SELECT * FROM Orders WHERE OrderDate > @fromdate
   IF @ix = 1 CREATE INDEX test ON Orders(ShipVia)
   SELECT * FROM Orders WHERE OrderDate > @fromdate
go
EXEC List_orders_7 '19980101', 1

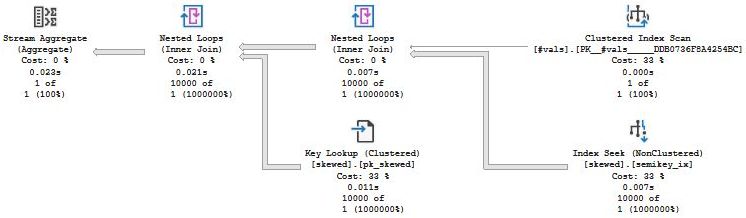

When you run this and look at the actual execution plan, you will see that the plan for the first SELECT is a Clustered Index Scan, which agrees with what we have learnt this far. SQL Server sniffs the value 1998-01-01 and estimates that the query will return 267 rows which is too many to read with Index Seek + Key Lookup. What SQL Server does not know is that the value of @fromdate changes before the queries are executed. Nevertheless, the plan for the second, identical, query is precisely Index Seek + Key Lookup and the estimate is that one row will be returned. This is because the CREATE INDEX statement sets a mark that the schema of the Orders table has changed, which triggers a recompile of the second SELECT statement. When recompiling the statement, SQL Server sniffs the value of the parameter which is current at this point, and thus finds the better plan.

Run the procedure again, but with different parameters (note that the date is two years earlier in time):

EXEC List_orders_7 '19960101', 0

The plans are the same as in the first execution, which is a little more exciting than it may seem at first glance. On this second execution, the first query is recompiled because of the added index, but this time the scan is the "correct" plan, since we retrieve about one third of the orders. However, since the second query is not recompiled now, the second query runs with the Index Seek from the previous execution, although now it is not an efficient plan.
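If you want to see traces of the statement-level recompilation in the DMVs, you can try a query like the one below before you clean up. It is a sketch that joins the procedure's entry in sys.dm_exec_procedure_stats to the per-statement rows in sys.dm_exec_query_stats; I would expect creation_time and plan_generation_num to differ between the statements, but I have not verified this for every version.

```sql
-- One row per statement in the cached plan for List_orders_7.
SELECT qs.statement_start_offset, qs.creation_time,
       qs.plan_generation_num, qs.execution_count
FROM   sys.dm_exec_procedure_stats ps
JOIN   sys.dm_exec_query_stats qs ON qs.plan_handle = ps.plan_handle
WHERE  ps.database_id = db_id()
  AND  ps.object_id   = object_id('List_orders_7')
ORDER  BY qs.statement_start_offset
```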

Before you continue, clean up:

DROP INDEX test ON Orders
DROP PROCEDURE List_orders_7

As I said, this example is contrived. I made it that way, because I wanted a compact example that is simple to run. In a real-life situation, you may have a procedure that uses the same parameter in two queries against different tables. The DBA creates a new index on one of the tables, which causes the query against that table to be recompiled, whereas the other query is not. The key takeaway here is that the plans for two statements in a procedure may have been compiled for different "sniffed" parameter values.

When we have seen this, it seems logical that this could be extended to local variables as well. But this is not the case:

CREATE PROCEDURE List_orders_8 AS
   DECLARE @fromdate datetime
   SELECT @fromdate = '20000101'
   SELECT * FROM Orders WHERE OrderDate > @fromdate
   CREATE INDEX test ON Orders(ShipVia)
   SELECT * FROM Orders WHERE OrderDate > @fromdate
   DROP INDEX test ON Orders
go
EXEC List_orders_8

In this example, we get a Clustered Index Scan for both SELECT statements, despite the fact that the second SELECT is recompiled during execution and the value of @fromdate is known at this point.

Note: if you add the hint OPTION (RECOMPILE), it is different. In this case, the values of local variables are considered. We will look more at this hint later in this article.
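As a quick illustration of the hint, you can run a loose batch like the one below against Northwind. It is a sketch reusing the query from the example; with the hint, the optimizer should treat @fromdate as if it were a constant with the value it has when the statement is reached.

```sql
DECLARE @fromdate datetime
SELECT @fromdate = '20000101'

-- Without the hint: standard assumption for an unknown value.
SELECT * FROM Orders WHERE OrderDate > @fromdate

-- With the hint: the current value of the local variable is considered.
SELECT * FROM Orders WHERE OrderDate > @fromdate OPTION (RECOMPILE)
```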

So far I have talked about scalar parameters and variables. Let's now turn to table variables, where things work differently, and there is also a difference between different versions of SQL Server. Consider this script:

ALTER DATABASE Northwind SET COMPATIBILITY_LEVEL = 140
go
CREATE PROCEDURE List_orders_9 AS
   DECLARE @ids TABLE (a int NOT NULL PRIMARY KEY)
   INSERT @ids (a)
      SELECT OrderID FROM Orders
   SELECT COUNT(*)
   FROM   Orders O
   WHERE  EXISTS (SELECT *
                  FROM   @ids i
                  WHERE  O.OrderID = i.a)
   CREATE INDEX test ON Orders(ShipVia)
   SELECT COUNT(*)
   FROM   Orders O
   WHERE  EXISTS (SELECT *
                  FROM   @ids i
                  WHERE  O.OrderID = i.a)
   DROP INDEX test ON Orders
go
EXEC List_orders_9
go
DROP PROCEDURE List_orders_9

Note that the first statement will fail if you are on SQL 2016 or earlier. In that case, just ignore the error, but you will not be able to run the second part of this lab.

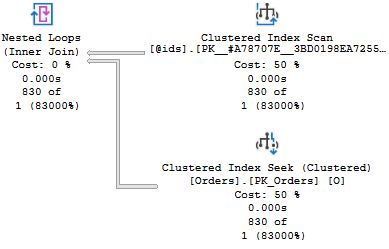

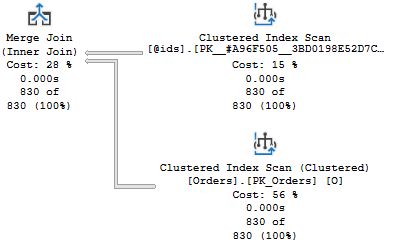

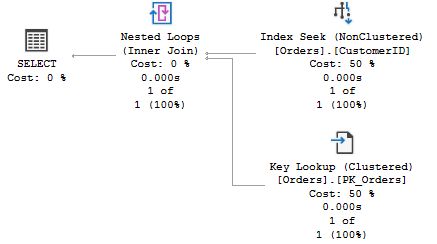

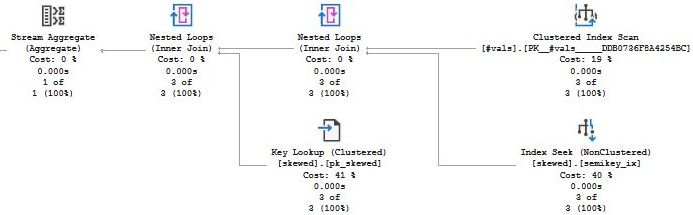

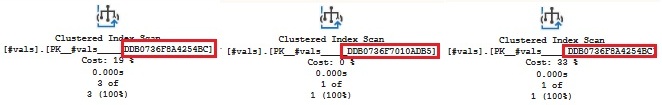

When you run this, you will get in total four execution plans. The two of interest are the second and fourth plans that come from the two identical SELECT COUNT(*) queries. I have included the interesting parts of the plans here:

In the first plan, the one to the left, SQL Server estimates that there is one row in the table variable (recall that when it says "830 of 1", this means 830 actual and 1 estimated), and as a consequence of that estimate, the optimizer settles for a Nested Loops Join operator together with a Clustered Index Seek on the Orders table. This is a poor choice in this case, since all rows are returned. But @ids is a local variable, and SQL Server has no knowledge of how many rows there are in the table when the procedure is compiled initially. The creation of an index triggers a recompile of the second SELECT statement before it is executed, and in contrast to a local scalar variable, SQL Server "sniffs" the cardinality of @ids, and you can see in the screen shot to the right that the estimate is now 830, and this leads to a better plan choice with a Merge Join.

If you are on SQL 2019, change 140 to 150 on the first line above and re-run. What you will find is that now the first execution also has a correct estimate for @ids, and the plan is a Merge Join. This is because of an enhancement in SQL 2019. It is a common pattern to declare a table variable, populate it with many rows and then use it in a query. This often leads to poor performance, because the plan is optimised for one row in the table variable when there are many. For this reason, Microsoft introduced deferred compilation for statements that refer to table variables. That is, if the compatibility level is 150 or higher, SQL Server does not compile any query plan for the two SELECT statements when the procedure starts, but defers this until execution reaches those statements. The plan that is created at this point is put into cache and reused on further executions.

Both these behaviours, the original behaviour with sniffing the local variable on statement recompile, and the deferred compilation introduced in SQL 2019, are generally beneficial. However, there is a risk that you can run into issues akin to parameter sniffing. Say that on the first execution there are 2000 rows in the table variable, but on subsequent executions there are only three to five rows. These latter executions will then run with a plan optimised for 2000 rows, which may not be the best for these smaller executions.

Note: It is possible to disable deferred compilation of statements with table variables, either for a specific query with a query hint or on database level with a database-scoped configuration. Observe that these settings do not apply to sniffing the cardinality of a table variable when a statement is recompiled.
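For reference, the two ways of disabling deferred compilation could look like this. It is a sketch based on the query from List_orders_9; the hint name and the configuration name are the ones I know from the documentation for SQL 2019.

```sql
-- For a single query, with a query hint:
DECLARE @ids TABLE (a int NOT NULL PRIMARY KEY)
INSERT @ids (a)
   SELECT OrderID FROM Orders
SELECT COUNT(*)
FROM   Orders O
WHERE  EXISTS (SELECT * FROM @ids i WHERE O.OrderID = i.a)
OPTION (USE HINT('DISABLE_DEFERRED_COMPILATION_TV'))
go
-- For all queries in the current database:
ALTER DATABASE SCOPED CONFIGURATION SET DEFERRED_COMPILATION_TV = OFF
```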

Finally, let's look at table-valued parameters. For these you will never get any blind assumption of one row, but SQL Server will sniff the cardinality of the parameter when it performs the initial compilation of the procedure. Here is an example:

CREATE TYPE temptype AS TABLE (a int NOT NULL PRIMARY KEY)
go
CREATE PROCEDURE List_orders_10 @ids temptype READONLY AS
   SELECT COUNT(*)
   FROM   Orders O
   WHERE  EXISTS (SELECT *
                  FROM   @ids i
                  WHERE  O.OrderID = i.a)
go
DECLARE @ids temptype
INSERT @ids (a)
   SELECT OrderID FROM Orders
EXEC List_orders_10 @ids
go
DECLARE @ids temptype
INSERT @ids (a) VALUES(11000)
EXEC List_orders_10 @ids
go
DROP PROCEDURE List_orders_10
DROP TYPE temptype

The query plan for this procedure is the same as for the second SELECT query in List_orders_9, that is Merge Join + Clustered Index Scan of Orders, since SQL Server sees the 830 rows in @ids when the query is compiled. The execution plan is the same for the second execution of List_orders_10, although this time the execution plan is not optimal. We know why this happens: SQL Server reuses the cached execution plan.

In this chapter, we have looked at how SQL Server compiles a stored procedure and what significance the actual parameter values have for compilation. We have seen that SQL Server puts the plan for the procedure into cache, so that the plan can be reused later. We have also seen that there can be more than one entry for the same stored procedure in the cache. We have seen that there is a large number of different cache keys, so potentially there can be very many plans for a single stored procedure. But we have also learnt that many of the SET options that are cache keys are legacy options that you should never change.

In practice, the most important SET option is ARITHABORT, because the default for this option is different in an application and in SQL Server Management Studio. This explains why you can spot a slow query in your application, and then run it at good speed in SSMS. The application uses a plan which was compiled for a different set of sniffed parameter values than the actual values, whereas when you run the query in SSMS, it is likely that there is no plan for ARITHABORT ON in the cache, so SQL Server will build a plan that fits with your current parameter values.

You have also understood that you can verify that this is the case by running this command in your query window:

SET ARITHABORT OFF

and with great likelihood, you will now get the slow behaviour of the application also in SSMS. If this happens, you know that you have a performance problem related to parameter sniffing. What you may not know yet is how to address this performance problem, and in the following chapters I will discuss possible solutions, before I return to the theme of compilation, this time for ad-hoc queries, a.k.a. dynamic SQL. In the last appendix, I will discuss a quite special situation reminiscent of parameter sniffing related to temp-table caching.

Note: There are always these funny variations. An application I worked with for many years actually issued SET ARITHABORT ON when it connected, so we should never have seen this confusing behaviour in SSMS. Except that we did. An older component of the application also issued the command SET NO_BROWSETABLE ON on connection. I have never been able to understand the impact of this undocumented SET command, but I seem to recall that it is related to early versions of "classic" ADO. And, yes, this setting is a cache key.

Before we delve into how to address performance problems related to parameter sniffing, which is quite a broad topic, I would first like to give some coverage to a couple of cases where parameter sniffing is not involved, but where you nevertheless may experience different performance in the application and SSMS.

I have already touched on this, but it is worth expanding on a bit.

Occasionally, I see people in the forums telling me that their stored procedure is slow, but when they run the same query outside of the procedure it's fast. After a few posts in the thread, the truth is revealed: The query they are struggling with refers to variables, be that local variables or parameters. To troubleshoot the query on its own, they have replaced the variables with constants. But as we have seen, the resulting stand-alone query is quite different, and SQL Server can make more accurate estimates with constants instead of variables, and therefore it arrives at a better plan. Furthermore, SQL Server does not have to consider that the constant may have a different value next time the query is executed.

A similar mistake is to make the parameters into variables. Say that you have:

CREATE PROCEDURE some_sp @par1 int AS
   ...
   -- Some query that refers to @par1

You want to troubleshoot this query on its own, so you do:

DECLARE @par1 int
SELECT @par1 = 4711
-- query goes here

From what you have learnt here, you know that this is very different from when @par1 really is a parameter. SQL Server has no idea about the value for @par1 when you declare it as a local variable and will make standard assumptions.

But if you have a 1000-line stored procedure, and one query is slow, how do you run it stand-alone with great fidelity, so that you have the same presumptions as in the stored procedure?

One way to tackle this is to embed the query in sp_executesql:

EXEC sp_executesql N'-- Some query that refers to @par1', N'@par1 int', 4711

You will need to double any single quotes in the query to be able to put it in a character literal. If the query refers to local variables, you should assign them in the block of dynamic SQL and not pass them as parameters so that you have the same presumptions as in the stored procedure.
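To make this more concrete, here is a sketch against Northwind. The query, the parameter @custid and the local variable @cutoff are all made up for the illustration. The parameter of the original procedure is passed as a parameter, the local variable is declared and assigned inside the dynamic SQL, and the single quotes around the date literal are doubled.

```sql
EXEC sp_executesql
     N'DECLARE @cutoff datetime
       SELECT @cutoff = dateadd(YEAR, -1, ''19980501'')
       SELECT *
       FROM   Orders
       WHERE  CustomerID = @custid
         AND  OrderDate > @cutoff',
     N'@custid nchar(5)',
     @custid = N'ALFKI'
```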

Another option is to create a dummy procedure with the problematic statement; this saves from doubling any quotes. To avoid litter in the database, you could create a temporary stored procedure:

CREATE PROCEDURE #test @par1 int AS
   -- query goes here.

As with dynamic SQL, make sure that local variables are locally declared also in your dummy. I need to add the caveat that I have not investigated whether SQL Server has special tweaks or limitations when optimising temporary stored procedures. Not that I see why there should be any, but I have been burnt before...
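A complete round trip with a temporary procedure could look like the sketch below. The query and the parameter value are placeholders against Northwind; the point is that the procedure is created, tested and dropped without leaving anything behind in the database.

```sql
CREATE PROCEDURE #test @par1 int AS
   -- Placeholder for the problematic statement from the real procedure.
   SELECT * FROM Orders WHERE OrderID > @par1
go
-- The parameter value is sniffed, just as in the real procedure.
EXEC #test 11000
go
DROP PROCEDURE #test
```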

You should not forget that one possible reason that the procedure ran slow in the application was simply a matter of blocking. When you tested the query three hours later in SSMS, the blocker had completed its work. If you find that no matter how you run the procedure in SSMS, with or without ARITHABORT, the procedure is always fast, blocking is starting to seem a likely explanation. Next time you are alarmed that the procedure is slow, you should start your investigation with some blocking analysis. That is a topic which is completely outside the scope for this article, but for a good tool to investigate locking, see my beta_lockinfo.
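For a first crude check while the procedure is running slowly, a query like the one below can tell you whether any request is currently blocked. It is only a minimal sketch that assumes you have VIEW SERVER STATE; it does not replace a proper blocking analysis.

```sql
-- Requests that are currently waiting for a lock held by another session.
SELECT r.session_id, r.blocking_session_id, r.wait_type, r.wait_time,
       r.wait_resource, t.text AS current_batch
FROM   sys.dm_exec_requests r
CROSS  APPLY sys.dm_exec_sql_text(r.sql_handle) t
WHERE  r.blocking_session_id <> 0
```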

Let's say that you run a query like this in two different databases:

SELECT ...
FROM   dbo.sometable
JOIN   dbo.someothertable ON ...
JOIN   dbo.yetanothertable ON ...
WHERE  ...

You find that the query performs very differently in the two databases. There can be a whole lot of reasons for these differences. Maybe the data sizes are entirely different in one or more of the tables, or the data is distributed differently. It could be that the statistics are different, even if the data is identical. It could also be that one database has an index that the other database has not.

Say that the query instead goes:

SELECT ...
FROM   thatdb.dbo.sometable
JOIN   thatdb.dbo.someothertable ON ...
JOIN   thatdb.dbo.yetanothertable ON ...
WHERE  ...

That is, the query refers to the tables in three-part notation. And yet you find that the query runs faster in SSMS than in the application or vice versa. But when you get the idea to change the database in SSMS to be the same as in the application, you get slow performance in SSMS as well. What is going on? It can certainly not be anything of what I discussed above, since the tables are the same, no matter the database you issue the query from.

The answer is that it may be parameter sniffing, because if you look at the output of the query I introduced in the section Different Plans for Different Settings, you will see that dbid is one of the cache keys. That is, if you run the same query against the same tables from two different databases, you get different cache entries, and thus there can be different plans. And, as we have learnt, one of the possible reasons for this is parameter sniffing. But it could also be that the settings for the two databases are different.

Thus, if this occurs to you, run this query:

SELECT * FROM sys.databases WHERE name IN ('slowdb', 'fastdb')

(Obviously, you should replace slowdb and fastdb with the names of the actual databases you ran from.) Compare the rows, and make note of the differences. Far from all the columns you see affect the plan choice. By far the most important one is the compatibility level. When Microsoft makes enhancements to the optimizer in a new version of SQL Server, they only make these enhancements available in the latest compatibility level, because although they are intended to be enhancements, there will always be queries that will be negatively affected by the change and run a lot slower.

If you are on SQL 2016 or later, you also need to look in sys.database_scoped_configurations and compare the settings between the two databases. (This view does not hold the database id or the name, but each database has its own version.) Quite a few of these settings affect the work of the optimizer, which could lead to different plans.
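One way to make the comparison is to join the two views and list only the settings that differ. This is a sketch; slowdb and fastdb are placeholders for your actual database names, and the query only compares the value column, not the settings for secondaries.

```sql
-- Database-scoped configurations that differ between the two databases.
SELECT f.name, f.value AS fastdb_value, s.value AS slowdb_value
FROM   fastdb.sys.database_scoped_configurations f
JOIN   slowdb.sys.database_scoped_configurations s
       ON s.configuration_id = f.configuration_id
WHERE  f.value <> s.value
   OR  (f.value IS NULL AND s.value IS NOT NULL)
   OR  (f.value IS NOT NULL AND s.value IS NULL)
```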

What you would do once you have identified the difference depends on the situation. But generally, I recommend against changing away from the defaults. (Starting with SQL 2017, sys.database_scoped_configurations has a column is_value_default which is 1, if the current setting is the default setting.)

For instance, if you find that the slow plan comes from a database with a lower compatibility level, consider changing the compatibility level for that database. But if the slow plan occurs with the database with the higher compatibility level, you should not change the setting, but rather work with the query and available indexes to see what can be done. In my experience, when a query regresses when going to a newer version of the optimizer, there is usually something that is problematic, and you were only lucky that the query ran fast on the earlier version.

There is one quite obvious exception to the rule of sticking with the defaults: if you find that in the fast database, the database-scoped configuration QUERY_OPTIMIZER_HOTFIXES is set to 1, you should consider enabling this setting for the other database as well, as this will give you access to optimizer fixes released after the original release of the SQL Server version you are using.
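Enabling the setting for the slow database could be done as in this sketch, where slowdb again is a placeholder for the real database name. The final query is only there to confirm the change.

```sql
USE slowdb      -- placeholder name
go
ALTER DATABASE SCOPED CONFIGURATION SET QUERY_OPTIMIZER_HOTFIXES = ON
go
SELECT name, value
FROM   sys.database_scoped_configurations
WHERE  name = 'QUERY_OPTIMIZER_HOTFIXES'
```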

Just to make it clear: the database settings do not only apply to queries with tables in three-part notation, but they can also explain why the same query or stored procedure with tables in one- or two-part notation gets different plans and performance in seemingly similar databases.

You have a query in a stored procedure that is slow. But when you put the query in a temporary procedure like I discussed above, it's fast. When you compare the query plans, you find that the fast version uses an indexed view, an index on a computed column, or a filtered index, but the slow procedure does not.

For the optimizer to consider any of these types of indexes, these settings must be ON: QUOTED_IDENTIFIER, ANSI_NULLS, ANSI_WARNINGS, ANSI_PADDING, and CONCAT_NULL_YIELDS_NULL. Furthermore, NUMERIC_ROUNDABORT must be OFF. Of these settings, QUOTED_IDENTIFIER and ANSI_NULLS are saved with the procedure. So in the scenario I described in the previous paragraph, a likely cause is that the stored procedure was created with QUOTED_IDENTIFIER and/or ANSI_NULLS set to OFF. You can investigate the stored settings for your procedure with this query:

SELECT objectpropertyex(object_id('your_sp'), 'IsQuotedIdentOn'),
       objectpropertyex(object_id('your_sp'), 'IsAnsiNullsOn')

If you see 0 in any of these columns, make sure that the procedure is reloaded with the proper settings.
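Reloading the procedure means running its CREATE or ALTER script again with both settings ON. The sketch below illustrates the idea; the parameter and the body are placeholders, and in real life you would run the procedure's actual source code unchanged.

```sql
SET ANSI_NULLS ON
SET QUOTED_IDENTIFIER ON
go
ALTER PROCEDURE your_sp @par1 int AS
   -- Placeholder body; use the original source of the procedure here.
   SELECT @par1 AS par1
go
-- Both columns should now return 1.
SELECT objectpropertyex(object_id('your_sp'), 'IsQuotedIdentOn'),
       objectpropertyex(object_id('your_sp'), 'IsAnsiNullsOn')
```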

There are two reasons related to SQL tools that can cause this to happen. First, as I noted earlier, SQLCMD by default connects with SET QUOTED_IDENTIFIER OFF, so if you deploy procedures with SQLCMD this can happen. Use the ‑I option to force QUOTED_IDENTIFIER ON! The other reason is something you would mainly encounter in a database that started its life in SQL 2000 or earlier. SQL 2000 came with a tool Enterprise Manager which always emitted SET ANSI_NULLS OFF and SET QUOTED_IDENTIFIER OFF before you created a stored procedure, and this was nothing you could configure. Later, when the database started to be maintained with SSMS, SSMS faithfully scripted these settings, and unless someone manually changed them they were retained through the years.

You can use this query to find all procedures with bad settings in your database:

SELECT o.name
FROM   sys.sql_modules m
JOIN   sys.objects o ON m.object_id = o.object_id
WHERE  (m.uses_quoted_identifier = 0 or
        m.uses_ansi_nulls = 0)
  AND  o.type NOT IN ('R', 'D')

Normally, problems related to indexed views and similar are due to stored settings, but obviously, if the application would meddle with any of the other four SET options, for instance, submit SET ANSI_WARNINGS OFF when connecting, this would also disqualify these types of indexes from being used, and thus be a reason for slow in the application, fast in SSMS.

Finally, if you have a database with compatibility level 80 (which is not supported on SQL 2012 and later), you should know that there is one more setting which must be ON for these indexes to be considered, and that is our favourite, ARITHABORT.

This section concerns an issue with linked servers which mainly occurs when the remote server is earlier than SQL 2012 SP1, but it can appear with later versions as well under some circumstances.

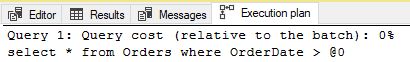

Consider this query:

SELECT C.*
FROM   SQL_2008.Northwind.dbo.Orders O
JOIN   Customers C ON O.CustomerID = C.CustomerID
WHERE  O.OrderID > 20000

I ran this query twice, logged in as two different users. The first user is sysadmin on both servers, whereas the second user is a plain user with only SELECT permissions. To ensure that I would get different cache entries, I used different settings for ARITHABORT.

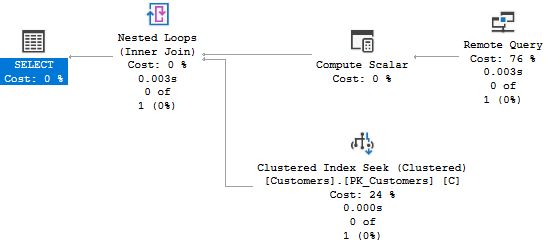

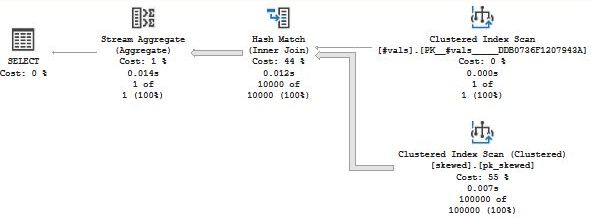

When I ran the query as sysadmin, I got this plan:

When I ran the query as the plain user, the plan was different:

How come the plans are different? It's certainly not parameter sniffing because there are no parameters. As always when a query plan has an unexpected shape or operator, it is a good idea to look at the estimates, and if you look at the numbers below the Remote Query operators, you can see that the estimates are different. When I ran as sysadmin, the estimate was 1 row, which is a correct number, since there are no orders in Northwind where the order ID exceeds 20000. (Recall that the optimizer never assumes zero rows from statistics.) But when I ran the query as a plain user, the estimate was 249 rows. We recognize this particular number as 30 % of 830 orders, or the estimate for an inequality operation when the optimizer has no information. Previously, this was due to an unknown variable value, but in this case there is no variable that can be unknown. No, it is the statistics themselves that are missing.

As long as a query accesses tables in the local server only, the optimizer can always access the statistics for all tables in the query. This happens internally in SQL Server and there are no extra checks to see whether the user has permission to see the statistics. But this is different with tables on linked servers. When SQL Server accesses a linked server, the optimizer needs to retrieve the statistics on the same connection that is used to retrieve the data, and it needs to use T‑SQL commands which is why permissions come into play. And, unless login mapping has been set up, those are the permissions of the user running the query.

By using Profiler or Extended Events you can see that the optimizer retrieves the statistics in two steps. First it calls the procedure sp_table_statistics2_rowset which returns information about which column statistics there are, as well as the cardinality and density information of the columns. In the second step, it runs DBCC SHOW_STATISTICS to get the full distribution statistics. (We will look closer at this command later in this article.)

Note: to be accurate, the optimizer does not talk to the linked server at all, but it interacts with the OLE DB provider for the remote data source and requests the provider to return the information the optimizer needs.

For sp_table_statistics2_rowset to run successfully the user must have the permission VIEW DEFINITION on the table. This permission is implied if the user has SELECT permission on the table (without which the user would not be able to run the query at all). So, unless the user has been explicitly denied VIEW DEFINITION, this procedure is not so much a concern.

DBCC SHOW_STATISTICS is a different matter. For a long time, running this command required membership in the server role sysadmin or in one of the database roles db_owner or db_ddladmin. This was changed in SQL 2012 SP1, so starting with this version, only SELECT permission is needed.

And this is why I got different results. As you can tell from the server name, my linked server was an instance running SQL 2008. Thus, when I was connected as a user that was sysadmin on the remote instance, I got the full distribution statistics which indicated that there are no rows with order ID > 20000, and the estimate was one row. But when running as the plain user, DBCC SHOW_STATISTICS failed with a permission error. This error was not propagated, but instead the optimizer accepted that there were no statistics and used default assumptions. Since it did get cardinality information from sp_table_statistics2_rowset, it learnt that the remote table has 830 rows, hence the estimate of 249 rows.

From what I said above, this should not be an issue if the linked server runs SQL 2012 SP1 or later, but there can still be obstacles. If the user does not have SELECT permission on all columns in the table, there may be statistics the optimizer is not able to retrieve. Furthermore, according to Books Online, there is a trace flag (9485) which permits the DBA to prevent SELECT permission from being sufficient for running DBCC SHOW_STATISTICS. And more importantly, if row-level security (a feature added in SQL 2016) has been set up for the table, only having SELECT permission is not sufficient as this could permit users to see data they should not have access to. That is, to run DBCC SHOW_STATISTICS on a table with row-level filtering enabled, you need membership in sysadmin, db_owner or db_ddladmin. (Interestingly enough, one would expect the same to apply if the table has Dynamic Data Masking enabled, but that does not seem to be the case.)

Thus, when you encounter a performance problem where a query that accesses a linked server is slow in the application, but it runs fast when you test it from SSMS (where you presumably are connected as a power user), you should always investigate the permissions on the remote database. (Keep in mind that the access to the linked server may not be overt in the query, but could be hidden in a view.)
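One way to investigate is to test directly on the remote server whether the user in question can read the distribution statistics. The sketch below assumes that you have permission to impersonate the user; the user name plainuser and the statistics name PK_Orders are hypothetical and should be replaced with the actual names.

```sql
-- Run this on the remote server, in the remote database.
EXECUTE AS USER = 'plainuser'                      -- hypothetical user name
go
DBCC SHOW_STATISTICS ('dbo.Orders', 'PK_Orders')   -- hypothetical statistics name
go
REVERT
```

If the DBCC command fails with a permission error, the optimizer on the local server will get the same error when it requests the statistics on behalf of this user.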

If you determine that permissions on the remote database are the problem, what actions could you take? Granting users more permissions on the remote server is of course an easy way out, but absolutely not recommendable from a security perspective. Another alternative is to set up login-mapping so that users log on to the remote server with a proxy user with sufficient powers. Again, this is highly questionable from a security perspective.

Rather you would need to tweak the query. For instance, you can rewrite the query with OPENQUERY to force evaluation on the remote server. This can be particularly useful, if the query includes several remote tables, since for the query that runs on the remote server, the remote optimizer has full access to the statistics on that server. (But it can also backfire, because the local optimizer now gets even less statistics information from the remote server.) You can also use the full battery of hints and plan guides to get the plan you want.
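As a sketch of the OPENQUERY approach, the query from the beginning of this section could be rewritten as below. The filter on OrderID is now evaluated entirely on the remote server, and the local optimizer only sees a rowset coming back from SQL_2008.

```sql
SELECT C.*
FROM   OPENQUERY(SQL_2008,
                 'SELECT CustomerID
                  FROM   Northwind.dbo.Orders
                  WHERE  OrderID > 20000') O
JOIN   Customers C ON O.CustomerID = C.CustomerID
```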

I would also recommend that you ask yourself (and the people around you): is that linked-server access needed? Maybe the databases could be on the same server? Could data be replicated? Some other solution? Personally, I try to avoid linked servers as much as possible. In my experience, linked servers often mean hassle.

Before I close this section, I would like to repeat: what matters is the permissions on the remote server, not the local server where the query is issued. I would also like to point out that I have turned a blind eye to what may happen with other remote data sources such as Oracle, MySQL or Access as they have different permission systems of which I'm entirely ignorant. You may or may not see similar issues when running queries against such linked servers.

I got this mail from a reader:

We recently found a SQL Server 2016 select query against CCIs that was slow in the application, and fast in SSMS. Application execution time was 3 to 4 times that of SSMS – looking a bit deeper it was seen that the ratio of CPU time was similar. This held true whether the query was executed in parallel as typical or in serial by forcing MAXDOP 1. An unusual facet was that Query Store was accounting the executions against the same row in sys.query_store_query and sys.query_store_plan, whether from the app or from SSMS.

The application was using a connection string enabling MARS, even though it didn’t need to. Removing that clause from the connection string resulted in SSMS and the application experiencing the same CPU time and elapsed time.

We’ll keep looking to determine the mechanics of the difference. I suspect it may be due to locking behavior difference – perhaps MARS disables lock escalation.

Note: MARS = Multiple Active Result Sets. If you set this property on a connection string, you can run multiple queries on the same connection in an interleaved fashion. It's mainly intended to permit you to submit UPDATE statements as you are iterating through a result set.

The observations from Query Store make it quite clear to me that the execution plan was the same in both cases. Thus, it cannot be a matter of parameter sniffing, different SET options or similar. Interestingly enough, some time later after I had added this section to the article, I got a mail from a second reader who had experienced the same thing. That is, access to a clustered columnstore index was significantly slower with MARS enabled.

This kept me puzzled for a while, and there was too little information for me to attempt to reproduce the issue. But then I got a third mail on this theme, and Nick Smith was kind enough to provide a simple example query:

SELECT TOP 10000 SiteId FROM MyTable

That is, it is simply a matter of a query that returns many rows. Note that in this case there was no columnstore index involved, but just a plain-vanilla table. I built a small C# program on this theme and measured execution time with and without MARS. At first, I could not discern any difference at all, but I was running against my local instance. Once I targeted my database in Azure, there was a difference of a factor of ten. When I connected to a server on the other side of town, the difference was a factor of three or four.

It seems quite clear to me that it is a matter of network latency. I assume that the interleaved nature of MARS introduces chattiness on the wire, so the slower and longer the network connection is, the more that chattiness is going to affect you.

Thus, if you find that a query that returns a lot of data runs slow in the application and a lot faster in SSMS, there is every reason to check whether the application specifies MultipleActiveResultSets=true in the connection string. If it does, ask yourself if you need it, and if not, take it out.
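If you cannot easily inspect the application's connection string, you may be able to spot MARS from the server side. The sketch below relies on the documented behaviour that sys.dm_exec_connections reports net_transport = 'Session' for the logical connections that belong to a MARS session, with parent_connection_id pointing to the physical connection. Treat it as a lead rather than proof: the logical connections are only listed while the application has them open, and you need VIEW SERVER STATE permission to see other sessions.

```sql
-- Lists logical MARS connections that are open right now, together with
-- the program and host that opened them.
SELECT c.session_id, c.connection_id, c.parent_connection_id,
       c.net_transport, s.program_name, s.host_name
FROM   sys.dm_exec_connections c
JOIN   sys.dm_exec_sessions s ON s.session_id = c.session_id
WHERE  c.net_transport = 'Session'
ORDER  BY c.session_id;
```

An empty result does not rule out MARS; it may only mean that no MARS-enabled connection was active at the moment you ran the query.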

Could MARS make an application go slower for some other reason? I see no reason to believe so, but it is still a little telling that my first two correspondents mentioned columnstore indexes. Also, the network latency does not really explain the difference in CPU mentioned in the quote above. So, if you encounter a situation where you conclude that MARS slows things down and that there is no network latency, I would be very interested in hearing from you.

This is something Daniel Lopez Atan ran into. He was investigating a procedure that was running slow from the application, but when he tried it in SSMS it was fast. He tried all the tricks in this article, but nothing seemed to fit. But then he noticed that the application ran the procedure inside a transaction. So he tried wrapping the procedure in BEGIN and COMMIT TRANSACTION when he called it from SSMS – and now it was slow from SSMS as well.

This is not something you will see every time there is a transaction involved, but you may see it with code that runs loops to update data one row at a time (or for one customer at a time or whatever). And it can cut both ways. That is, you may find that when you wrap this sort of procedure in a transaction, it actually runs faster. Confusing, eh? Don't worry, there is a pattern.

Say that you have a loop without any transaction at all where you insert one row at a time. Since every INSERT is its own transaction, SQL Server must wait until the transaction has been hardened, that is, until its log records have been written to disk, before it can move on. On the other hand, if there is a user-defined transaction around the whole thing, SQL Server can continue directly, fully knowing that if something fails before commit, everything will be rolled back. Thus, the loop now runs quite a bit faster.

However, for reasons I have not fully grasped, if the uncommitted transaction becomes too large, the overhead for a write starts to grow, and eventually it takes considerably longer to insert a single row than if there had been no transaction at all. This is what happened in Daniel's case. I have seen this myself a few times.

So if your performance issue includes loops, you should definitely investigate whether transactions are being used. If they are not, you should consider wrapping the loop in a transaction, possibly with a commit after every thousand rows or so. And vice versa, if it is all one long transaction and it runs faster without, remove the transaction – or even better, keep it, but with commit after every thousand rows. Keep in mind that in either case, you need to understand the business requirements. They may mandate all or nothing, in which case it must remain a single transaction. Or they may mandate that a single bad row must not result in other rows being lost, which rules out the use of transactions at all.
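The pattern with a commit after every thousand rows could look something like the sketch below. The table dbo.looptest, the row count and the batch size are assumptions made up for the illustration, and error handling is left out to keep the example short.

```sql
-- Hypothetical demo table; the name and columns are assumptions.
CREATE TABLE dbo.looptest (id   int          NOT NULL PRIMARY KEY,
                           data varchar(100) NOT NULL);

DECLARE @i         int = 1,
        @rows      int = 100000,
        @batchsize int = 1000;

BEGIN TRANSACTION;

WHILE @i <= @rows
BEGIN
   -- One row at a time, as in the loops discussed above.
   INSERT dbo.looptest (id, data)
      VALUES (@i, replicate('x', 100));

   -- After every @batchsize rows, commit and start a new transaction, so
   -- that the open transaction never grows beyond one batch.
   IF @i % @batchsize = 0
   BEGIN
      COMMIT TRANSACTION;
      BEGIN TRANSACTION;
   END

   SET @i += 1;
END

-- Commits the last batch (which is empty if @rows is a multiple of @batchsize).
COMMIT TRANSACTION;
```

To compare the three variants, you can run the loop once as written, once with all transaction statements removed, and once with only the outermost BEGIN and COMMIT TRANSACTION, and note the elapsed time of each run. Which batch size works best is something to measure rather than assume.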

We have learnt how it can be that you have a stored procedure that runs slow in the application, and yet the very same call runs fast when you try it in SQL Server Management Studio: because of different settings of ARITHABORT you get different cache entries, and since SQL Server employs parameter sniffing, you may get different execution plans.

While the secret behind the mystery now has been unveiled, the main problem still remains: how do you address the performance problem? From what you have read this far, you already know of a quick fix. If you have never seen the problem before and/or the situation is urgent, you can always do:

EXEC sp_recompile problem_sp

As we have seen, this will flush the procedure from the plan cache, and next time it is invoked, there will be a new query plan. And if the problem never comes back, consider the case closed.

But if the problem keeps reoccurring – and unfortunately, this is the more likely outcome – you need to perform a deeper analysis, and in this situation you should make sure that you get hold of the slow plan before you run sp_recompile, or in some other way alter the procedure. You should keep that slow plan around, so that you can examine it, and not least find what parameter values the bad plan was built for. This is the topic for this chapter.

Note: If you are on SQL 2016 or later and you have enabled Query Store for your database, you can find all information about the plans in the Query Store views, even after the plans have been flushed. In a later chapter, I present Query Store versions of the queries that appear in this chapter.

Before I go on, a small observation: above I recommended that you should change your preferences in SSMS, so that you by default connect with ARITHABORT OFF to avoid this kind of confusion. But there is actually a small disadvantage with having the same settings as the application: you may not observe that the performance problem is related to parameter sniffing. But if you make it a habit when investigating performance issues to run your problem procedure with ARITHABORT both ON and OFF, you can easily conclude whether parameter sniffing is involved.

Also, I would like to clarify one thing. "Parameter-sniffing problems" is not a wholly accurate term, as the problem is not really the parameter sniffing per se. A better term would be parameter-sensitive queries or parameter-sensitive plans, because that's the root cause of the problems. However, "parameter sniffing" is the term used in general parlance for these types of problems, and I have decided to follow this pattern in this article.

All performance troubleshooting requires facts. If you don't have facts, you will be in the situation that Bryan Ferry describes so well in the song Sea Breezes from the first Roxy Music album:

We've been running round in our present state

Hoping help will come from above

But even angels there make the same mistakes

If you don't have facts, not even the angels will be able to help you. The base facts you need to troubleshoot performance issues related to parameter sniffing are:

Almost all of these points apply to about any query-tuning effort. Only the third point is unique to parameter-sniffing issues. That, and the plural in the second point: you want to look at two plans, the good plan and the bad plan. In the following sections, we will look at these points one by one.

First on the list is to find the slow statement – in most cases, the problem lies with a single statement. If the procedure has only one statement, this is trivial. Else, you can use Profiler to find out; the Duration column will tell you. Either just trace the procedure from the application, or run the procedure from Management Studio (with ARITHABORT OFF!) and filter for your own spid.

Yet another option is to use the stored procedure sp_sqltrace, written by Lee Tudor and which I am glad to host on my website. sp_sqltrace takes an SQL batch as parameter, starts a server-side trace, runs the batch, stops the trace and then summarises the result. There are a number of input parameters to control the procedure, for instance how to sort the output. This procedure is particularly useful to determine the slow statement in a loop, since you get the totals aggregated per statement.

In many cases, you can easily find the query plans by running the procedure in Management Studio, after first enabling Include Actual Execution Plan (you find it under the Query menu). This works well, as long as the procedure does not include a multitude of queries, in which case the Execution Plan tab gets too littered to work with. We will look at alternative strategies in the coming sections.

Typically, you would run the procedure like this:

SET ARITHABORT ON
go
EXEC that_very_sp 4711, 123, 1
go
SET ARITHABORT OFF
go
EXEC that_very_sp 4711, 123, 1

The assumption here is that the application runs with the default options, in which case the first call will give the good plan – because the plan is sniffed for the parameters you provide – and the second call will run with the bad plan which already is in the plan cache. To determine the cache keys in play, you can use the query in the section Different Plans for Different Settings to see the cache-key values for the plan(s) in the cache. (If you have already tried the procedure in Management Studio, you may have two entries. The column execution_count in sys.dm_exec_query_stats can help you to tell the entries apart; the one with the low count is probably your attempt from SSMS.)
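As a rough sketch of how execution_count and the cache keys can be viewed side by side, the query below lists the cache-key attributes for every cached plan of a procedure. It is not the query from the section Different Plans for Different Settings, only an illustration in the same spirit, and dbo.that_very_sp is a placeholder for your own procedure. It must be run in the database where the procedure lives.

```sql
-- One row per statement in the cache and cache-key attribute.
-- Compare set_options between the plan handles to see which settings differ.
SELECT qs.plan_handle, qs.creation_time, qs.execution_count,
       a.attribute, a.value
FROM   sys.dm_exec_query_stats qs
CROSS  APPLY sys.dm_exec_sql_text(qs.sql_handle) est
CROSS  APPLY sys.dm_exec_plan_attributes(qs.plan_handle) a
WHERE  est.objectid = object_id('dbo.that_very_sp')
  AND  est.dbid     = db_id()
  AND  a.is_cache_key = 1
ORDER  BY qs.plan_handle, a.attribute;
```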

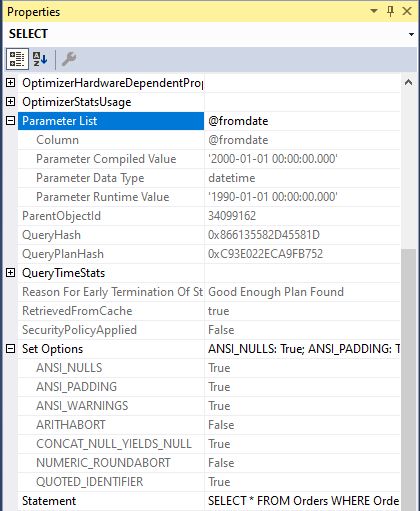

Once you have the plans, you can easily find the sniffed parameter values. Right-click the left-most operator in the plan – the one that reads SELECT, INSERT, etc – select Properties, which will open a pane to the right. (That is the default position; the pane is detachable.) Here is an example how it can look like:

The first Parameter Compiled Value is the sniffed value which is causing you trouble one way or another. If you know your application and its usage pattern, you may get an immediate revelation when you see the value. Maybe you do not, but at least you know now that there is a situation where the application calls the procedure with this possibly odd value. Note also that you can see the settings of some of the SET options that are cache keys.
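The same values can also be read directly from the plan XML of a cached plan, which is handy when you cannot open the plan in the graphical viewer. The following is a minimal sketch under a few assumptions: dbo.that_very_sp is a placeholder name, the query is run in the procedure's database, and the plan is small enough for sys.dm_exec_query_plan to return it (for very complex plans it returns NULL). A cached plan holds only the compiled values, not the run-time values.

```sql
-- One row per statement and parameter with the value the plan was compiled for.
WITH XMLNAMESPACES
   (DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')
SELECT s.stmt.value('@StatementId', 'int')                      AS stmt_id,
       cr.c.value('@Column', 'nvarchar(128)')                   AS parameter,
       cr.c.value('@ParameterCompiledValue', 'nvarchar(4000)')  AS sniffed_value
FROM   sys.dm_exec_procedure_stats ps
CROSS  APPLY sys.dm_exec_query_plan(ps.plan_handle) qp
CROSS  APPLY qp.query_plan.nodes('//StmtSimple') AS s(stmt)
CROSS  APPLY s.stmt.nodes('QueryPlan/ParameterList/ColumnReference') AS cr(c)
WHERE  ps.object_id   = object_id('dbo.that_very_sp')
  AND  ps.database_id = db_id()
ORDER  BY stmt_id, parameter;
```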

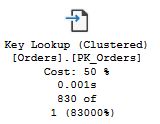

When you look at a query plan, it is far from always apparent what part of the plan that is really costly. But the thickness of the arrows is a good lead. The thicker the arrow, the more rows are passed to the next operator. And if you are looking at an actual execution plan, the thickness is based on the actual number of rows. The graphical plan also offers some useful information for each query operator. As an example, take this Nested Loops operator:

Just below the operator name, you see Cost, which is supposed to convey how much of the total cost of the query this operator contributes. My recommendation is that you give this value a miss. The cost is based solely on the estimates, and it has no relation to the actual cost. Least of all if the estimates are inaccurate – and this is not uncommon when you have a performance problem.

Below the cost, you see the execution time. This certainly is a relevant number. You need to keep in mind, though, that the execution time for an operator includes the execution time for the operators to the right sending data into it. Thus, if you see a big difference in execution time between an operator and the operators to its right, you may be on to something.

Note: Execution times per operator are available from SQL 2014 and up, so if you have SQL 2012 or earlier, you will not see this part.

We looked at the numbers below the execution time in an earlier chapter, but they are important, so it is worth repeating. On top is the actual number of rows, and below that is the estimated number of rows. At the bottom, the actual value is expressed as a percentage of the estimate. When the percentage is high, like 83000 % as in this example, there is a gross misestimate which there is every reason to look into.